Are you looking for trouble?

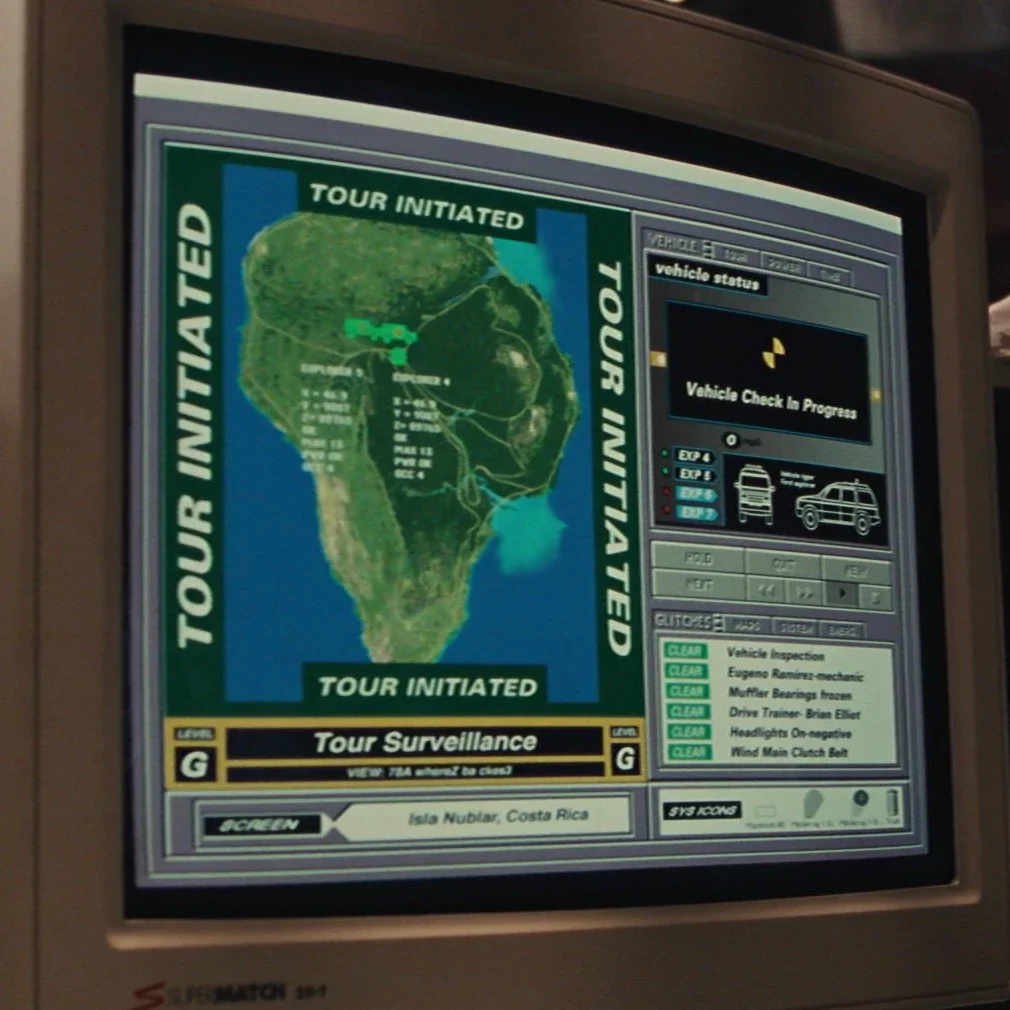

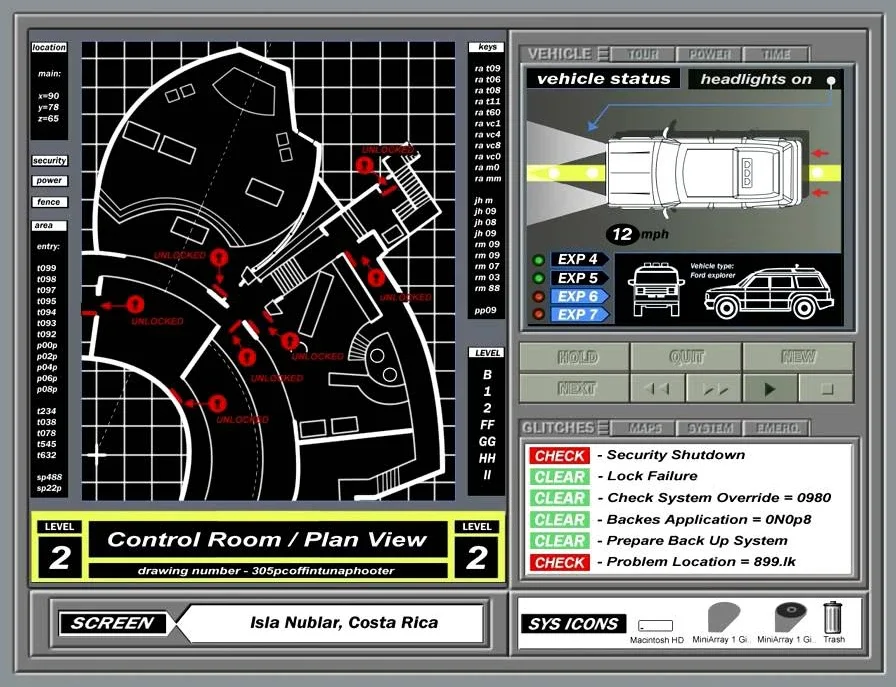

Do you know where your servers are right now? Good sysadmins and DevOps engineers use automation and monitoring, but good sysadmins don’t rely on them. The problem with automated monitoring, as we know from the movie Jurassic Park, is that you only find what you’re looking for.

In this article I’ll explain why paranoia, suspicion and snooping are essential items in your sysadmin toolbox, and give you a few hints on how to find trouble you didn’t know you had.

From time to time I log into the various servers under my control and start snooping around. I just look at what’s going on, make sure that nothing unexpected is happening, and check that the things that should be happening actually are happening! I look at the process list, the syslog, root’s mailbox, and anywhere else that catches my eye. It’s surprising what kind of problems you can find once you start actively looking for them.

The other night I was looking at the process list on a server and saw that several copies of the nightly backup script were running. The script was trying to upload the backup tarball to a remote NAS, and as part of this process it tried to delete the previous week’s tarball. That file didn’t exist, so the delete command failed. The script wasn’t smart enough to handle the failure, so it just kept retrying. As a result, the backup never got properly copied offsite, and the machine was starting to become overloaded with backup jobs.
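The underlying bug is a common one: the script treated “nothing to delete” as an error and retried without limit. Below is a minimal Python sketch of a safer pattern. It assumes the NAS is reachable as a mounted local path, since the article doesn’t say how the original script talks to it. `delete_if_present` and `with_retries` are hypothetical helper names, not part of the original script.

```python
import os
import time


def delete_if_present(path):
    """Delete path, treating a file that is already gone as success.

    Returns True if a file was deleted, False if there was nothing to delete.
    """
    try:
        os.remove(path)
    except FileNotFoundError:
        # Nothing to delete (e.g. last week's tarball was never uploaded):
        # not an error, so don't retry.
        return False
    return True


def with_retries(action, attempts=3, delay=5.0):
    """Call action(), retrying on OSError at most `attempts` times in total."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except OSError:
            if attempt == attempts:
                # Give up loudly: the traceback ends up in cron's mail to root
                # and the job exits non-zero instead of looping forever.
                raise
            time.sleep(delay)
```

Capping `attempts` means a persistent failure ends the run with an error, rather than leaving one more copy of the script running every night.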

Why is this important? Many monitoring systems would not pick up this condition. Would yours? You may want to improve your monitoring to detect at least the following:

Check that the job actually ran, by looking at the modified date on the logfile.

Check that the job terminated (look for a completion message in its log) and check its exit status.

If the job creates a file, check for the existence and the size of this file.

If something gets copied offsite, check the offsite copy and make sure it exists and matches the source file.
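The four checks above could be sketched in Python roughly as follows. This is an illustration under stated assumptions, not a drop-in check. Several details are hypothetical and should be adapted to your own job:

- the completion line format (`COMPLETED exit_status=<n>`)
- the 26-hour freshness window
- the 1 KiB minimum size
- the assumption that the offsite copy is visible as a local path, such as a mounted NAS share

```python
import hashlib
import os
import time


def log_is_fresh(log_path, max_age_hours=26):
    """Check 1: the job ran recently, judged by the logfile's modification time.

    26 hours (hypothetical) gives a nightly job some slack beyond 24 hours.
    """
    try:
        age_seconds = time.time() - os.path.getmtime(log_path)
    except FileNotFoundError:
        return False  # no logfile at all: the job has probably never run
    return age_seconds <= max_age_hours * 3600


def job_succeeded(log_path):
    """Check 2: the job finished and reported exit status 0.

    Assumes a hypothetical convention where the job (or a wrapper around it)
    appends a final line of the form 'COMPLETED exit_status=<n>'.
    """
    try:
        with open(log_path) as f:
            lines = [line.strip() for line in f if line.strip()]
    except FileNotFoundError:
        return False
    if not lines or not lines[-1].startswith("COMPLETED exit_status="):
        return False  # never finished, still running, or stuck retrying
    return lines[-1].split("=", 1)[1] == "0"


def output_ok(path, min_bytes=1024):
    """Check 3: the output file exists and is not suspiciously small."""
    return os.path.isfile(path) and os.path.getsize(path) >= min_bytes


def sha256_of(path):
    """Hash a file in 1 MiB chunks so large tarballs don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def offsite_matches(local_path, offsite_path):
    """Check 4: the offsite copy exists and has the same SHA-256 as the source."""
    if not (os.path.isfile(local_path) and os.path.isfile(offsite_path)):
        return False
    return sha256_of(local_path) == sha256_of(offsite_path)


def run_checks(log_path, tarball, offsite_copy):
    """Run all four checks and return {check name: passed?}."""
    return {
        "ran recently": log_is_fresh(log_path),
        "completed with status 0": job_succeeded(log_path),
        "tarball present": output_ok(tarball),
        "offsite copy matches": offsite_matches(tarball, offsite_copy),
    }
```

For example, `run_checks("/var/log/backup.log", "/srv/backup/nightly.tar.gz", "/mnt/nas/nightly.tar.gz")` (hypothetical paths) returns a dict of booleans. A small wrapper can turn any `False` into a non-zero exit status for whatever runs your checks. Comparing digests rather than sizes also catches a corrupted copy that happens to be the right length. The cost is reading the whole offsite file back over the network.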

You get the idea. No automated monitoring system is perfect. A great way to improve it is to look at your servers with an open mind, and when you find problems you didn’t expect, add monitoring checks to catch them. Jordan Sissel’s article on Cron Practices is a good place to start improving the reliability of your cron jobs.

I found several other little gotchas in the script which needed fixing. It’s easy to assume that a script that’s been in production for years is free of bugs. That’s almost certainly not the case, and many of those bugs won’t be found until you go through the script with a critical eye. If you use version control and test-driven development for your scripts, as recommended by Hugh Brown in his Development for Sysadmins article, this will be a big help.

A good sysadmin is a good detective. You should make time as part of your job to pick on a machine every so often and examine it forensically, like a crime scene. What’s here that shouldn’t be? Are things working like they’re supposed to? Are there any problems with this machine that have gone unnoticed by the automatic monitoring?

I recommend scheduling yourself a regular task at least once a month to inspect each machine that you’re responsible for. It should take 10-15 minutes per machine to just look around and reassure yourself that everything is ticking over nicely. You may want to also use this time to look at what isn’t monitored on the box, and consider adding monitoring for these things. Certainly every time you find a problem, part of the resolution should be to add a monitoring check which would catch this problem if it ever happened again.

If you have a problem justifying this time to your boss, point out that preventative maintenance is much cheaper than, for example, waiting for a disk to fail and then doing emergency repairs, facing possible downtime and even data loss. A single anomalous or mysterious error message in the syslog can be an early warning of a problem that may be about to become serious.

Here are some places to look:

Logwatch output (ideally, have this mailed to you and read it every day).

Root’s mailbox: errors from cron jobs will come here, as will mail bounces.

The syslog is a great resource, but often very large and hard to read. Try configuring your syslog.conf to send messages from different subsystems (mail, for example) to different logfiles.

The process list (`ps` or `top`). What’s running that you don’t expect to see? Is anything using an unusual amount of CPU? Is the machine spending more time in iowait than it should? That may indicate a disk problem or a degraded RAID array.

The crontab. Not every job runs as root, so go looking in the `/etc/cron.*` directories and `/var/spool/cron`. What jobs are running? Are they working? Should they be there? Is their output logged and monitored?

Places you (and I) wouldn’t think of. Go snooping. See what catches your eye. A detective looks without preconceptions, so she’s ready to spot the unexpected.
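A short script can give a snooping session a starting point for a few of these places. The sketch below assumes a Linux host with `/proc` mounted. It does three things:

- computes the share of CPU time spent in iowait over a short interval, from `/proc/stat`
- counts processes whose command line contains a given string, which helps spot a pile-up like the duplicated backup jobs described earlier
- lists the files under `/etc/cron.*` and `/var/spool/cron` to read through

The helper names are hypothetical, and reading `/var/spool/cron` normally requires root.

```python
import glob
import os
import time


def cpu_times(stat_text):
    """Return (iowait, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            fields = [int(x) for x in line.split()[1:]]
            # Fields: user nice system idle iowait irq softirq steal guest guest_nice.
            # guest/guest_nice are already counted in user/nice, so sum only the first 8.
            return fields[4], sum(fields[:8])
    raise ValueError("no aggregate 'cpu' line found")


def iowait_percent(before, after):
    """Percentage of CPU time spent in iowait between two cpu_times() samples."""
    d_iowait = after[0] - before[0]
    d_total = after[1] - before[1]
    return 100.0 * d_iowait / d_total if d_total else 0.0


def sample_iowait(interval=1.0):
    """Sample /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = cpu_times(f.read())
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = cpu_times(f.read())
    return iowait_percent(before, after)


def count_matching(needle, proc_root="/proc"):
    """Count processes whose command line contains `needle`."""
    count = 0
    for pid in os.listdir(proc_root):
        if not pid.isdigit():
            continue  # skip non-process entries such as /proc/self or /proc/stat
        try:
            with open(os.path.join(proc_root, pid, "cmdline"), "rb") as f:
                # Arguments are NUL-separated; join them with spaces for matching.
                cmdline = f.read().replace(b"\0", b" ").decode(errors="replace")
        except OSError:
            continue  # process exited while we were looking, or no permission
        if needle in cmdline:
            count += 1
    return count


def cron_files(locations=None):
    """List files under /etc/cron.* and /var/spool/cron (or the given paths)."""
    if locations is None:
        locations = sorted(glob.glob("/etc/cron.*")) + ["/var/spool/cron"]
    found = []
    for loc in locations:
        if os.path.isfile(loc):
            found.append(loc)
        elif os.path.isdir(loc):
            # os.walk silently skips directories we aren't allowed to read.
            for dirpath, _dirs, files in os.walk(loc):
                found.extend(os.path.join(dirpath, name) for name in sorted(files))
    return found
```

For example, `count_matching("nightly-backup")` (a hypothetical script name) returning more than 1 is worth a closer look. A persistently high `sample_iowait()` suggests checking disk and RAID health. The script only points you at things; reading its output with a suspicious eye is still your job.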

I hope the above makes you suspicious and paranoid, in a good way - a way that makes you a better sysadmin. Anything that can go wrong will go wrong, and is going wrong somewhere on your network, right now. Get into the habit of looking for trouble. You’re sure to find it!

A previous version of this article appeared on the SysAdvent web site.